What is Kubernetes?

Kubernetes, often referred to as “K8s,” is an open-source container orchestration system that automates the deployment, scaling, and management of containerized applications. This guide gives a simplified explanation of its core concepts and components, including the cluster architecture, etcd, the Kube API server, and more.

Core Concepts

Kubernetes is built around the cluster: a group of machines that work together to run containerized applications. Each machine in a cluster is called a node. Every node runs a container runtime (for example, containerd or CRI-O), the Kubelet, and usually Kube proxy, along with the application workloads assigned to it. The control plane components typically run on one or more dedicated control plane nodes rather than on every node.

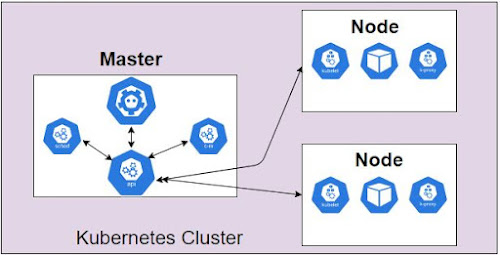

Cluster Architecture

A Kubernetes cluster is composed of a set of worker nodes and a control plane. The worker nodes run the containerized applications, while the control plane is responsible for managing the state of the cluster and of the applications running on it. The control plane components are etcd, the Kube API server, the Kube controller manager, and the Kube scheduler. The Kubelet and Kube proxy, covered below, are node components rather than control plane components: they run on the nodes themselves.

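etcd in K8s

etcd is a consistent, highly available key-value store that Kubernetes uses as the backing store for all cluster data. Every API object is stored there, including both its desired state (its spec, such as how many replicas a Deployment should run) and its most recently observed state (its status, such as whether a node is Ready). Only the Kube API server reads from and writes to etcd directly; all other components go through the API server.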
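To make the storage model concrete, here is a toy, in-memory stand-in for etcd. It is not the etcd client API: the `ToyKV` class and its methods are hypothetical. It only mimics the properties the paragraph relies on: each object is stored as one value under a hierarchical key (the layout loosely follows the API server's default `/registry/<resource>/<namespace>/<name>`), a revision counter increases on every write, and listing objects becomes a range read by key prefix.

```python
import json


class ToyKV:
    """Hypothetical in-memory stand-in for etcd (not the real client API)."""

    def __init__(self):
        self._data = {}      # key -> (value, revision of last modification)
        self.revision = 0    # store-wide counter, bumped on every write

    def put(self, key, value):
        self.revision += 1
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key):
        value, _ = self._data[key]
        return value

    def get_prefix(self, prefix):
        # Range read: every key that starts with the prefix, in key order.
        return {k: v for k, (v, _) in sorted(self._data.items()) if k.startswith(prefix)}

    def mod_revision(self, key):
        return self._data[key][1]


store = ToyKV()
# Each object is one serialized value (JSON here for readability) holding
# its desired state under "spec" and its observed state under "status".
store.put("/registry/pods/default/web-1",
          json.dumps({"spec": {"image": "nginx"}, "status": {"phase": "Pending"}}))
store.put("/registry/pods/default/web-2",
          json.dumps({"spec": {"image": "nginx"}, "status": {"phase": "Running"}}))
store.put("/registry/pods/kube-system/dns-1",
          json.dumps({"spec": {"image": "coredns"}, "status": {"phase": "Running"}}))

# "List all pods in the default namespace" becomes a prefix read.
default_pods = store.get_prefix("/registry/pods/default/")
print(sorted(default_pods))  # ['/registry/pods/default/web-1', '/registry/pods/default/web-2']
print(store.revision)        # 3
```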

Kube API Server

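The Kube API server is the front end of the control plane and the hub of all communication in the cluster. Clients such as kubectl, the scheduler, the controllers, and the Kubelets on the nodes all talk to the API server rather than to each other, and it is the only component that talks to etcd. It exposes a RESTful API that can be used to create, read, update, and delete resources in the cluster, such as pods and services; each request is authenticated, authorized, and validated before the change is stored in etcd.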
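The REST API follows a predictable URL layout. The sketch below builds request paths for namespaced resources: core resources such as pods and services live under `/api/v1`, while resources in named API groups, such as Deployments in the `apps` group, live under `/apis/<group>/<version>`. The `resource_path` helper is hypothetical and written only for illustration; the path shapes and the mapping of operations to HTTP methods follow the standard Kubernetes conventions.

```python
# Hypothetical helper that builds Kubernetes REST paths for namespaced resources.
def resource_path(resource, namespace, name=None, group="", version="v1"):
    # The core ("legacy") group has no name and lives under /api;
    # every named group lives under /apis/<group>.
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{base}/namespaces/{namespace}/{resource}"
    return path if name is None else f"{path}/{name}"


# How CRUD operations map onto HTTP methods.
CRUD_TO_HTTP = {
    "create": "POST",    # sent to the collection path
    "read": "GET",       # a single object, or the whole collection to list it
    "update": "PUT",     # full replacement; PATCH is used for partial updates
    "delete": "DELETE",
}

print(CRUD_TO_HTTP["create"], resource_path("pods", "default"))
# POST /api/v1/namespaces/default/pods
print(CRUD_TO_HTTP["read"], resource_path("deployments", "prod", "web", group="apps"))
# GET /apis/apps/v1/namespaces/prod/deployments/web
```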

Kube-Controller Manager

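The Kube controller manager is responsible for keeping the cluster in its desired state. It runs a collection of controllers in a single process, for example the node, ReplicaSet, Deployment, and ServiceAccount controllers. Each controller is a control loop: it watches the objects it is responsible for through the API server, compares their actual state with the desired state, and creates, updates, or deletes resources as needed to close the gap.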
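Every controller follows the same observe, compare, act pattern. As a minimal sketch, the toy controller below imitates one real responsibility of the controller manager: the ServiceAccount controller's job of making sure each namespace has a service account named `default`. The cluster is modeled as a plain Python dictionary, and both functions are hypothetical; a real controller learns about changes through watches on the API server rather than by scanning a dictionary.

```python
def reconcile_default_service_accounts(cluster):
    """Hypothetical toy ServiceAccount controller: one observe/compare/act pass."""
    actions = []
    for namespace in cluster["namespaces"]:                      # observe
        accounts = cluster["service_accounts"].setdefault(namespace, set())
        if "default" not in accounts:                            # compare
            accounts.add("default")                              # act
            actions.append(f"created serviceaccount {namespace}/default")
    return actions


def run_controllers(cluster, controllers, max_passes=10):
    """Hypothetical manager: call every controller until a pass changes nothing."""
    for _ in range(max_passes):
        changes = [a for controller in controllers for a in controller(cluster)]
        if not changes:
            return True   # converged: actual state matches desired state
    return False


cluster = {"namespaces": ["default", "team-a"],
           "service_accounts": {"default": {"default"}}}
print(reconcile_default_service_accounts(cluster))  # ['created serviceaccount team-a/default']

cluster["namespaces"].append("team-b")               # someone creates a namespace
print(run_controllers(cluster, [reconcile_default_service_accounts]))  # True
print(sorted(cluster["service_accounts"]))           # ['default', 'team-a', 'team-b']
```

Because each pass compares whole states instead of reacting to individual events, running it again after a missed event or a restart still converges on the same result.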

Kube-Scheduler

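The Kube scheduler assigns newly created pods that do not yet have a node to worker nodes. For each pod it first filters out the nodes that cannot run it (for example, nodes without enough unrequested CPU or memory for the pod's resource requests, or nodes with taints the pod does not tolerate), then scores the remaining nodes and binds the pod to the highest-scoring one. The factors it considers include resource requests, node selectors, affinity and anti-affinity rules, and taints and tolerations. The scheduler only chooses the node; the Kubelet on that node actually starts the pod.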
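The filter-then-score cycle can be sketched in a few lines. This is a simplification under stated assumptions: it filters on CPU and memory requests and on taints the pod does not tolerate (taints are reduced to plain strings), and it scores nodes by how much capacity would remain unrequested after placing the pod, roughly in the spirit of the scheduler's least-allocated resource scoring. The `Node` and `Pod` fields and the `schedule` function are hypothetical; the real kube-scheduler runs a configurable set of filter and score plugins.

```python
from dataclasses import dataclass


@dataclass
class Node:                      # hypothetical, simplified node model
    name: str
    cpu_capacity: int            # allocatable CPU in millicores
    cpu_requested: int           # millicores already requested by pods on the node
    mem_capacity: int            # allocatable memory in MiB
    mem_requested: int           # MiB already requested by pods on the node
    taints: frozenset = frozenset()


@dataclass
class Pod:                       # hypothetical, simplified pod model
    name: str
    cpu: int                     # CPU request in millicores
    mem: int                     # memory request in MiB
    tolerations: frozenset = frozenset()


def feasible(pod, node):
    # Filter phase: the requests must fit, and every taint must be tolerated.
    return (node.cpu_requested + pod.cpu <= node.cpu_capacity
            and node.mem_requested + pod.mem <= node.mem_capacity
            and node.taints <= pod.tolerations)


def score(pod, node):
    # Score phase: the fraction of capacity left unrequested, averaged over CPU and memory.
    cpu_left = (node.cpu_capacity - node.cpu_requested - pod.cpu) / node.cpu_capacity
    mem_left = (node.mem_capacity - node.mem_requested - pod.mem) / node.mem_capacity
    return (cpu_left + mem_left) / 2


def schedule(pod, nodes):
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None              # no node fits: the pod stays Pending
    return max(candidates, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", 4000, 3500, 8192, 1024),                      # CPU almost fully requested
    Node("node-b", 4000, 1000, 8192, 4096),
    Node("node-c", 8000, 0, 16384, 0, frozenset({"gpu"})),       # tainted for GPU jobs
]
print(schedule(Pod("web", cpu=500, mem=512), nodes))             # node-b
print(schedule(Pod("train", cpu=2000, mem=4096,
                   tolerations=frozenset({"gpu"})), nodes))      # node-c
```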

Kubelet

The Kubelet is an agent that runs on each node. It watches the Kube API server for pods that have been assigned to its node, instructs the container runtime (for example, containerd or CRI-O) through the Container Runtime Interface to start and stop their containers, and reports the status of the node and its pods back to the API server.
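The Kubelet's core job can be pictured as a reconcile step scoped to one node: take the pods the API server has bound to this node (those whose `spec.nodeName` matches), compare them with the containers the runtime reports, and start or stop containers to close the gap. The `sync_node` function and the data shapes below are hypothetical; the real Kubelet also handles probes, restarts, volumes, and status reporting.

```python
def sync_node(node_name, api_pods, running):
    """Hypothetical Kubelet sync step. Returns (to_start, to_stop) as sets of
    (pod, container) pairs.

    api_pods: pod name -> {"nodeName": str, "containers": [container names]}
    running:  set of (pod, container) pairs the runtime reports on this node
    """
    desired = {
        (pod, container)
        for pod, spec in api_pods.items()
        if spec["nodeName"] == node_name      # only pods bound to this node
        for container in spec["containers"]
    }
    to_start = desired - running              # wanted but not running yet
    to_stop = running - desired               # running but no longer wanted
    return to_start, to_stop


api_pods = {
    "web-1": {"nodeName": "node-a", "containers": ["nginx", "log-agent"]},
    "web-2": {"nodeName": "node-b", "containers": ["nginx"]},
}
running_on_a = {("web-1", "nginx"), ("old-job", "worker")}

to_start, to_stop = sync_node("node-a", api_pods, running_on_a)
print(sorted(to_start))  # [('web-1', 'log-agent')]
print(sorted(to_stop))   # [('old-job', 'worker')]
```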

Kube Proxy

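Kube proxy is a network proxy that runs on each node and implements Services. It watches Services and their endpoints through the API server and programs the node's packet-forwarding rules (for example, with iptables or IPVS, depending on its mode) so that traffic sent to a Service's virtual IP and port is forwarded to one of the pods backing that Service.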
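Conceptually, the rules Kube proxy programs amount to a lookup table from a Service's virtual IP and port to its current backend pod addresses, plus a rule for picking one backend per new connection. The model below is a hypothetical illustration of that table, not how Kube proxy is implemented (it writes kernel rules rather than forwarding packets itself). It picks backends round-robin, which resembles the IPVS mode's default `rr` scheduler; iptables mode picks a backend at random instead. The `ServiceTable` class and all addresses are made up.

```python
class ServiceTable:
    """Hypothetical toy model of the Service-to-backend mapping on one node."""

    def __init__(self):
        self._backends = {}   # (cluster_ip, port) -> list of "podIP:port"
        self._next = {}       # (cluster_ip, port) -> index of the next backend

    def sync(self, cluster_ip, port, endpoints):
        # Called whenever the Service's endpoints change (pods added or removed).
        key = (cluster_ip, port)
        self._backends[key] = list(endpoints)
        self._next[key] = 0

    def route(self, dest_ip, dest_port):
        # Choose the backend for a new connection to the Service's virtual IP.
        key = (dest_ip, dest_port)
        backends = self._backends.get(key)
        if not backends:
            raise LookupError(f"no ready endpoints for {dest_ip}:{dest_port}")
        i = self._next[key]
        self._next[key] = (i + 1) % len(backends)
        return backends[i]


table = ServiceTable()
table.sync("10.96.0.20", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
print([table.route("10.96.0.20", 80) for _ in range(3)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.1.5:8080']
```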

Pod

A pod is the smallest deployable unit in Kubernetes. It wraps a single container or a small group of tightly coupled containers that are always scheduled together on the same node, share one network namespace (and therefore one IP address and port space), and can share storage volumes.
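A pod is described by a manifest. The sketch below builds a minimal two-container Pod manifest as a Python dictionary and serializes it to JSON, which `kubectl apply -f` accepts as well as YAML. The names, image tags, and the shared `emptyDir` volume are illustrative choices, and the second container is a hypothetical log shipper that reads files the first one writes: a typical reason to put two containers in one pod.

```python
import json

# Minimal Pod manifest: two containers sharing one volume and one network namespace.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "volumes": [{"name": "logs", "emptyDir": {}}],   # scratch space shared by both
        "containers": [
            {
                "name": "nginx",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                # Hypothetical sidecar; it could also reach nginx at localhost:80,
                # because both containers share the pod's IP address.
                "name": "log-shipper",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}

manifest = json.dumps(pod, indent=2)
print(manifest)
```

Saved to a file such as `pod.json`, the output can be applied to a running cluster with `kubectl apply -f pod.json`.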

ReplicaSets

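A ReplicaSet is a Kubernetes object that ensures a specified number of replicas of a pod are running at any given time. It is the successor to the ReplicationController: it identifies the pods it manages with a label selector and creates or deletes pods until the number of matching pods equals the desired replica count. In practice you rarely create ReplicaSets directly, because Deployments create and manage them for you.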
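The ReplicaSet controller's decision reduces to counting the pods that match its label selector and comparing that count with the desired number of replicas. Below is a minimal sketch with a hypothetical `reconcile_replicaset` function and pods modeled as a name-to-labels dictionary. The new pod names are placeholders (real pods get generated random suffixes), and the real controller is more careful about which pods it removes first, preferring, for example, pods that are not yet scheduled or not ready.

```python
def matches(selector, labels):
    # matchLabels semantics: every key/value pair in the selector must be present.
    return all(labels.get(k) == v for k, v in selector.items())


def reconcile_replicaset(name, replicas, selector, pods):
    """Hypothetical ReplicaSet step. Returns (pods_to_create, pods_to_delete).

    pods: pod name -> labels dict
    """
    owned = sorted(p for p, labels in pods.items() if matches(selector, labels))
    diff = replicas - len(owned)
    if diff > 0:   # scale up: placeholder names stand in for generated ones
        return [f"{name}-new-{i}" for i in range(diff)], []
    if diff < 0:   # scale down: remove the surplus
        return [], owned[:-diff]
    return [], []


pods = {
    "web-a": {"app": "web", "tier": "frontend"},
    "web-b": {"app": "web", "tier": "frontend"},
    "db-a": {"app": "db"},                      # does not match the selector
}
selector = {"app": "web"}
print(reconcile_replicaset("web", 3, selector, pods))  # (['web-new-0'], [])
print(reconcile_replicaset("web", 1, selector, pods))  # ([], ['web-a'])
```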

Deployments

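A Deployment is a higher-level object built on top of ReplicaSets that lets you declaratively manage a set of identical pods. You declare the pod template and the number of replicas, and the Deployment controller creates a ReplicaSet to run them. When you change the template (for example, to a new image version), it creates a new ReplicaSet and performs a rolling update, scaling the new ReplicaSet up while scaling the old one down, and it keeps the old ReplicaSet so that the change can be rolled back.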
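Two settings of the rolling update strategy bound how far a rollout may stray from the desired replica count: `maxSurge` (how many extra pods may exist above the desired count) and `maxUnavailable` (how many pods may be unavailable below it). Both default to 25%, and when they are given as percentages Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The hypothetical `rollout_bounds` helper below applies that arithmetic.

```python
def resolve(value, replicas, round_up):
    """Turn an int or a percentage string such as "25%" into a pod count."""
    if isinstance(value, int):
        return value
    percent = int(value.rstrip("%"))
    scaled = replicas * percent
    # Integer ceiling/floor avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Hypothetical helper: pod-count limits that hold throughout a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return {
        "max_total_pods": replicas + surge,            # old + new pods at any moment
        "min_available_pods": replicas - unavailable,
    }


print(rollout_bounds(10))  # {'max_total_pods': 13, 'min_available_pods': 8}
print(rollout_bounds(3))   # {'max_total_pods': 4, 'min_available_pods': 3}
```

With 3 replicas and the defaults, the rollout may never drop below 3 available pods, so it makes progress only by surging to a fourth pod and retiring an old one once the new one is ready.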

Services (ClusterIP / LoadBalancer)

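A Service is an abstraction that defines a logical set of pods, usually selected by their labels, and a policy for accessing them. Because pods are replaced over time and their IP addresses change, a Service gives clients a stable virtual IP address and DNS name, and it load-balances traffic across the pods that currently back it. The default type, ClusterIP, makes the Service reachable only from inside the cluster; the LoadBalancer type additionally asks the cloud provider to provision an external load balancer that forwards traffic into the cluster.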
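Which pods a Service sends traffic to is decided by its label selector together with pod readiness: only pods that match the selector and pass their readiness checks receive traffic. The hypothetical `service_endpoints` function below sketches that selection, which the EndpointSlice controller performs in a real cluster. It is a simplification: the real controller also records not-ready pods, flagged as not ready, so that consumers such as Kube proxy can skip them, and it tracks ports by name. The pod data shape and addresses are made up.

```python
def service_endpoints(selector, target_port, pods):
    """Hypothetical: "ip:port" strings for ready pods whose labels match the selector.

    pods: list of dicts with "ip", "labels", and "ready" keys
    """
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod["ready"] and all(pod["labels"].get(k) == v for k, v in selector.items())
    ]


pods = [
    {"ip": "10.244.1.5", "labels": {"app": "web"}, "ready": True},
    {"ip": "10.244.2.7", "labels": {"app": "web"}, "ready": False},   # still starting
    {"ip": "10.244.3.9", "labels": {"app": "db"}, "ready": True},     # different app
]
print(service_endpoints({"app": "web"}, 8080, pods))  # ['10.244.1.5:8080']
```

When the second pod becomes ready, or a replacement pod with a new IP appears, recomputing the list picks it up while clients keep using the same Service address.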

Namespaces

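Namespaces divide a single cluster into multiple virtual clusters. The names of namespaced objects, such as pods, services, and deployments, only need to be unique within their namespace, and access control (RBAC) and resource quotas can be set per namespace. This makes resources easier to organize and lets multiple teams share a cluster without interfering with each other. Some objects, such as nodes and namespaces themselves, are cluster-scoped and do not belong to any namespace.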
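Namespaces show up directly in how objects are identified: a namespaced object is keyed by its namespace and name together, and a Service gets a DNS name of the form `<service>.<namespace>.svc.<cluster-domain>`. The sketch below assumes the common default cluster domain `cluster.local` (a cluster can be configured with a different one); the `Registry` class and `service_dns_name` helper are hypothetical.

```python
class Registry:
    """Hypothetical store for namespaced objects: names are unique per namespace."""

    def __init__(self):
        self._objects = {}

    def create(self, kind, namespace, name, obj):
        key = (kind, namespace, name)
        if key in self._objects:
            raise ValueError(f'{kind} "{name}" already exists in namespace "{namespace}"')
        self._objects[key] = obj


def service_dns_name(service, namespace, cluster_domain="cluster.local"):
    # Assumes the default cluster domain. Pods in the same namespace can
    # also reach the Service by its short name alone.
    return f"{service}.{namespace}.svc.{cluster_domain}"


registry = Registry()
registry.create("Service", "team-a", "db", {"port": 5432})
registry.create("Service", "team-b", "db", {"port": 5432})   # same name, other namespace: fine

print(service_dns_name("db", "team-a"))  # db.team-a.svc.cluster.local
```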

Imperative vs Declarative

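Kubernetes can be used in both imperative and declarative ways. In imperative mode, you tell Kubernetes exactly what to do, step by step: for example, `kubectl run nginx --image=nginx` creates a pod, and `kubectl scale deployment web --replicas=3` changes a replica count. In declarative mode, you describe the desired state of the system in manifest files and submit them with `kubectl apply -f`; Kubernetes compares that description with what is running and makes whatever changes are needed to reach it. Because manifests can be kept in version control and safely re-applied, the declarative approach is generally preferred for production.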
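The difference shows up in what the client computes. An imperative command states the change itself; a declarative apply starts from the desired state and derives the change by comparing it with the live object. The hypothetical `diff` function below does that comparison as a simple two-way diff over nested dictionaries, using a simplified object shape. This is only an approximation: `kubectl apply` actually performs a three-way merge that also consults the last applied configuration (or, with server-side apply, tracks field ownership), so that fields you stop declaring can be removed without overwriting fields set by other tools.

```python
def diff(desired, live, path=""):
    """Hypothetical two-way diff: changes needed to make `live` match `desired`.

    Fields that exist only in `live` are left alone.
    """
    changes = []
    for key, want in desired.items():
        here = f"{path}.{key}" if path else key
        have = live.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            changes += diff(want, have, here)       # recurse into nested objects
        elif want != have:
            changes.append(f"set {here} = {want!r}")
    return changes


live = {"spec": {"replicas": 2, "template": {"image": "nginx:1.24"}}}
desired = {"spec": {"replicas": 3, "template": {"image": "nginx:1.25"}}}

# Declarative: describe the target state; the required changes are derived.
print(diff(desired, live))
# ['set spec.replicas = 3', "set spec.template.image = 'nginx:1.25'"]

# Applying the same desired state again is a no-op, which is why re-applying is safe.
print(diff(desired, desired))  # []
```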

Manual Scheduling

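By default, the Kube scheduler assigns pods to nodes automatically. To schedule a pod manually, you set its `spec.nodeName` field to the name of a node when you create the pod; the scheduler then ignores it, and the Kubelet on that node runs it directly. For a pod that already exists but has not been scheduled yet, you can instead create a Binding object through the API. If you only want to influence the scheduler rather than bypass it, you can use a node selector or node affinity to restrict a pod to nodes with particular labels, taints and tolerations to keep pods off certain nodes, and pod anti-affinity to keep pods away from one another. This is useful, for example, when you want to run certain types of workloads on dedicated nodes.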
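The two approaches differ in who makes the final choice. In the sketch below, the first manifest names its node directly through `spec.nodeName`, so the scheduler never considers it; the second uses `spec.nodeSelector`, which the scheduler treats as a filter that a node's labels must fully satisfy. The node name `node-2`, the `disktype: ssd` label, and the two helper functions are hypothetical.

```python
# Manual scheduling: nodeName is set, so the scheduler leaves this pod alone.
pinned = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "pinned"},
    "spec": {"nodeName": "node-2",
             "containers": [{"name": "app", "image": "nginx:1.25"}]},
}

# Constrained scheduling: the scheduler still decides, but only among matching nodes.
constrained = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "constrained"},
    "spec": {"nodeSelector": {"disktype": "ssd"},
             "containers": [{"name": "app", "image": "nginx:1.25"}]},
}


def needs_scheduler(pod):
    # Hypothetical helper: the scheduler only picks up pods with no nodeName yet.
    return not pod["spec"].get("nodeName")


def node_allowed(pod, node_labels):
    # Hypothetical helper: the node must carry every nodeSelector label with the same value.
    selector = pod["spec"].get("nodeSelector", {})
    return all(node_labels.get(k) == v for k, v in selector.items())


print(needs_scheduler(pinned), needs_scheduler(constrained))        # False True
print(node_allowed(constrained, {"disktype": "ssd", "zone": "a"}))  # True
print(node_allowed(constrained, {"disktype": "hdd"}))               # False
```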

Summary

Kubernetes is a powerful tool for managing containerized applications, with a wide range of features for automating their deployment, scaling, and management. Understanding its core concepts, architecture, and components is essential for using it effectively in production environments.